What Is a Feedforward Neural Network? A Comprehensive Guide

Introduction

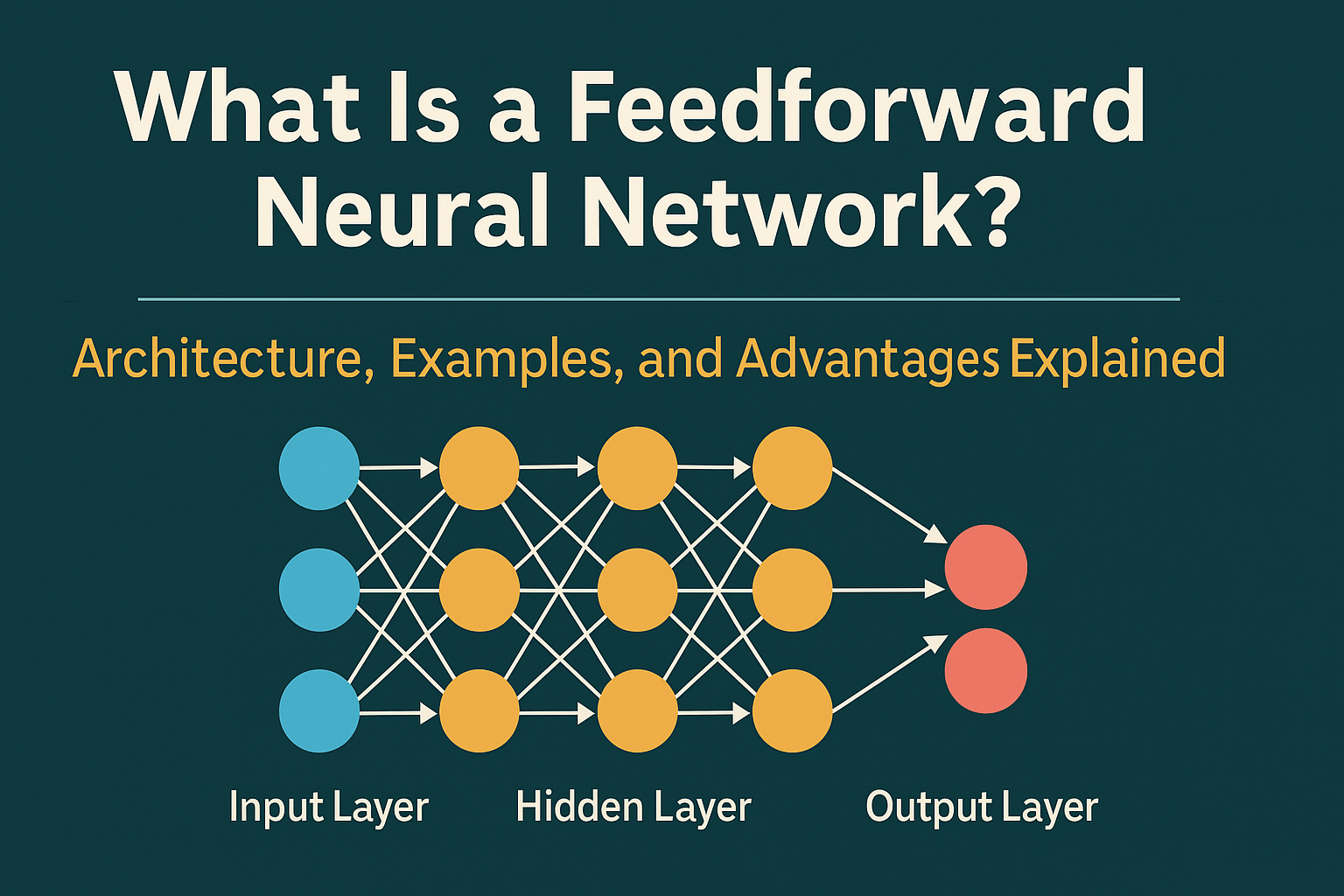

Feedforward Neural Networks (FNNs) are among the most fundamental architectures in artificial intelligence and machine learning. Information flows in one direction, from the input layer to the output layer, and FNNs are used in tasks such as image recognition, natural language processing, and predictive analytics. This guide covers their history, structure, advantages, limitations, and applications.

Understanding Feedforward Neural Networks

A Feedforward Neural Network is an artificial neural network in which the connections between nodes do not form cycles. Information moves in one direction only: forward from the input nodes, through any hidden nodes, to the output nodes. It is the simplest type of neural network and serves as the foundation for more complex architectures.

Historical Evolution

The concept of neural networks dates back to 1943, when the McCulloch-Pitts neuron model was introduced. In 1958, Frank Rosenblatt introduced the perceptron, a single-layer FNN, which laid the groundwork for future developments. Over the decades, advances in computational power and training algorithms have brought FNNs into mainstream machine learning applications.

Architecture and Functionality

An FNN typically comprises three types of layers:

- Input Layer: Receives the initial data.
- Hidden Layer(s): Processes inputs through weighted connections and activation functions.
- Output Layer: Produces the final prediction or classification.

In a fully connected FNN, each neuron in a layer is connected to every neuron in the next layer. The network learns by adjusting the weights of these connections to minimize the error in its predictions.
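The layer structure described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the helper names (`dense_layer`, `forward`, `init_layer`), the layer sizes, and the choice of a sigmoid activation in every layer are all assumptions made for the example.

```python
import math
import random


def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    """Fully connected layer: every input feeds every neuron in the layer.

    weights[j][i] is the weight from input i to neuron j.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def forward(inputs, layers):
    """Pass the inputs through each layer in order; nothing flows backward."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases, sigmoid)
    return activations


def init_layer(n_in, n_out, rng):
    """Hypothetical helper: small random weights and zero biases."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


rng = random.Random(0)
# Assumed sizes: 3 inputs -> 4 hidden neurons -> 1 output.
network = [init_layer(3, 4, rng), init_layer(4, 1, rng)]
prediction = forward([0.5, -1.2, 3.0], network)
print(prediction)
```

The weights here are random, so the printed value is not a meaningful prediction; training is what turns this structure into a useful model.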

Real-World Applications

Feedforward Neural Networks are employed in various domains:

- Image and Speech Recognition: Classifying images or transcribing speech.
- Financial Forecasting: Predicting stock prices or market trends.
- Medical Diagnosis: Identifying diseases based on symptoms and test results.
- Natural Language Processing: Language translation and sentiment analysis.

Advantages of Feedforward Neural Networks

- Simplicity: Straightforward architecture makes them easy to implement and understand.
- Efficiency: A prediction needs only a single forward pass through the layers, with no recurrent state to update.
- Versatility: Applicable to both regression and classification tasks.
- Deterministic Output: With fixed weights, the same input always produces the same output.

Limitations and Challenges

- Inability to Handle Sequential Data: Not ideal for tasks involving time-series or sequential information.
- Overfitting: Prone to overfitting, especially with limited training data.
- Computational Resources: Training large FNNs can be resource-intensive.
- Lack of Memory: Cannot retain information from previous inputs, limiting their use in certain applications.

Educational Resources and Courses

For those interested in learning more about FNNs:

- Online Courses: Various platforms offer courses on neural networks and deep learning.
- Academic Textbooks: Comprehensive materials covering the theoretical aspects.
- Workshops and Seminars: Interactive sessions for hands-on experience.

Comparative Analysis: FNNs vs. Other Neural Networks

| Feature | Feedforward Neural Network | Recurrent Neural Network | Convolutional Neural Network |
|---|---|---|---|
| Data Flow | Unidirectional | Cyclical | Unidirectional |
| Memory | No | Yes | No |
| Best Suited For | Static data | Sequential data | Image data |
| Complexity | Low | High | Medium |

Case Study: Problem-Solving with FNNs

Scenario: A retail company wants to predict monthly sales based on advertising spend, seasonality, and economic indicators.

Solution: The company builds an FNN with one or more input nodes for each factor and trains it on historical data. Once trained, the FNN predicts future sales, which informs inventory and marketing decisions.
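As a rough sketch of how the three factors might become input node values, the snippet below encodes one month of data as a list of numbers. Everything in it is an assumption made for illustration: the function name `encode_features`, the `max_ad_spend` scaling constant, the sine/cosine encoding of the month, and the sample values, which are made up and not real sales data.

```python
import math


def encode_features(ad_spend, month, economic_index, max_ad_spend=100_000.0):
    """Turn one month's raw factors into a list of FNN input values.

    All parameter names and the scaling constant are illustrative assumptions.
    """
    # Scale spend into roughly [0, 1] so no single input dominates.
    spend_scaled = ad_spend / max_ad_spend
    # Encode the month (1-12) as a point on a circle so that
    # December ends up next to January.
    angle = 2.0 * math.pi * (month - 1) / 12.0
    return [spend_scaled, math.sin(angle), math.cos(angle), economic_index]


# Made-up example values for a single January.
inputs = encode_features(ad_spend=25_000.0, month=1, economic_index=0.3)
print(inputs)
```

Under this encoding the network would have four input nodes, two of which together represent seasonality. Other encodings, such as one input per month, would work as well.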

Conclusion

Feedforward Neural Networks are a cornerstone in the field of machine learning, offering a straightforward yet powerful approach to modeling complex relationships in data. While they have limitations, their simplicity and effectiveness make them a valuable tool for various applications.

Frequently Asked Questions (FAQs)

What differentiates FNNs from other neural networks?

FNNs process data in one direction without loops, making them distinct from recurrent neural networks that handle sequential data.

Can FNNs handle time-series data?

FNNs are not ideal for time-series data as they lack memory of previous inputs. Recurrent Neural Networks are better suited for such tasks.

What are common activation functions used in FNNs?

Common activation functions include Sigmoid, Tanh, and ReLU, each introducing non-linearity into the network.
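For reference, the three functions can be written with Python's standard `math` module. This is a minimal sketch for single numbers; the sample inputs are arbitrary.

```python
import math


def sigmoid(z):
    """Maps z into (0, 1); often used for binary outputs."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Maps z into (-1, 1) and is centered on zero."""
    return math.tanh(z)


def relu(z):
    """Passes positive values through and clips negatives to 0."""
    return max(0.0, z)


# Compare the three functions on a few sample inputs.
for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z), relu(z))
```

Without a non-linear function like these between layers, a stack of layers would collapse into a single linear transformation.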

How do FNNs learn from data?

FNNs learn by adjusting the weights of connections between neurons to minimize the error between predicted and actual outputs, typically using algorithms like backpropagation.
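The sketch below shows one way this weight adjustment could look for a tiny network with a single hidden layer, trained by backpropagation and per-sample gradient descent. The toy dataset (logical OR), the layer size, the learning rate, the epoch count, and all function names are assumptions chosen to keep the example short; they are not recommended settings.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Return the hidden activations and the single output value."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output


def mean_squared_error(data, params):
    total = 0.0
    for x, target in data:
        _, output = forward(x, *params)
        total += (output - target) ** 2
    return total / len(data)


def train(data, n_hidden=2, learning_rate=0.5, epochs=2000, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b_hidden = [0.0] * n_hidden
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b_out = 0.0
    initial_loss = mean_squared_error(data, (w_hidden, b_hidden, w_out, b_out))

    for _ in range(epochs):
        for x, target in data:
            hidden, output = forward(x, w_hidden, b_hidden, w_out, b_out)
            # Error signal at the output: derivative of
            # 0.5 * (output - target)^2 passed back through the sigmoid.
            delta_out = (output - target) * output * (1.0 - output)
            # Propagate the error back to each hidden neuron.
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
                            for j in range(n_hidden)]
            # Gradient descent step on every weight and bias.
            for j in range(n_hidden):
                w_out[j] -= learning_rate * delta_out * hidden[j]
                for i in range(n_in):
                    w_hidden[j][i] -= learning_rate * delta_hidden[j] * x[i]
                b_hidden[j] -= learning_rate * delta_hidden[j]
            b_out -= learning_rate * delta_out

    params = (w_hidden, b_hidden, w_out, b_out)
    return params, initial_loss, mean_squared_error(data, params)


# Toy dataset: logical OR of two inputs.
or_data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
           ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
params, loss_before, loss_after = train(or_data)
print(loss_before, loss_after)
```

The second printed number should be smaller than the first, which is the "minimize the error" part in action. Real projects usually rely on a framework that computes these gradients automatically.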

Are FNNs suitable for image recognition tasks?

Yes, FNNs can be used for image recognition, although Convolutional Neural Networks are more specialized for such tasks due to their ability to capture spatial hierarchies.

What is the main purpose of a Feedforward Neural Network?

Feedforward Neural Networks are used to map input data to output predictions. They are ideal for classification and regression tasks in machine learning.

How does a Feedforward Neural Network work?

FNNs process data in one direction, from input to output, through layers of neurons. Each layer computes a weighted sum of its inputs, adds a bias, and applies an activation function.

What are the key components of a Feedforward Neural Network?

Key components include the input layer, one or more hidden layers, an output layer, activation functions, and weighted connections.

Where are Feedforward Neural Networks used?

They are used in image recognition, language translation, medical diagnosis, stock prediction, and other machine learning applications.

What is the difference between Feedforward and Recurrent Neural Networks?

Feedforward Networks do not have memory or loops, while Recurrent Neural Networks retain previous input information and are better for sequential data.

Are Feedforward Neural Networks still used in modern AI?

Yes, FNNs are widely used for foundational tasks and are often a building block for more advanced networks like CNNs and RNNs.
